The AI Revolution: The Epic Saga of AI Breakthroughs

Artificial intelligence (AI) has transformed science, reshaping both the technology landscape and the way people innovate. Over the past few decades, AI has progressed far beyond what was once thought possible, and its advances span a wide spectrum, from computer vision and automation to machine learning and natural language processing. As researchers and engineers keep pushing the limits of AI, these developments deepen our understanding of the field. They also reshape economies, entire industries, and the way we interact with technology every day. Among the most notable are breakthroughs in computer vision and natural language processing. This period of discovery highlights AI's great promise, but it also raises significant ethical, social, and scientific challenges.

Machine Learning Mastery

Author, instructor, and machine learning practitioner Jason Brownlee founded the well-known platform "Machine Learning Mastery." The platform aims to help people learn and become proficient in machine learning principles and techniques by offering tools, lessons, and advice.

Key features of Machine Learning Mastery

- Books and Courses: Jason Brownlee's books and online courses cover a wide range of machine learning topics. They are designed to be hands-on and practical, walking readers through machine learning algorithms step by step.

- Blog: The Machine Learning Mastery blog is an excellent resource for anyone interested in machine learning. It provides instructions, tutorials, and guidance on a variety of AI, deep learning, and machine learning topics. Even when it covers advanced subjects, the material is often accessible to beginners.

- Practical Focus: One of Machine Learning Mastery's strengths is its emphasis on real-world applications. Jason Brownlee frequently offers walkthroughs and code examples for well-known machine learning libraries such as TensorFlow and scikit-learn. This hands-on approach helps learners apply abstract ideas to real problems.

- Newsletter: Machine Learning Mastery offers a newsletter that provides subscribers with updates on new articles, tutorials, and resources. This helps the community stay informed about the latest developments in the field.

- Community Support: Machine Learning Mastery has a community forum where learners can connect, ask questions, and share their experiences. Community support can be valuable for those navigating the challenges of learning machine learning.

- Effective Communication: Jason Brownlee is known for explaining difficult ideas in a clear, straightforward way. This makes the material accessible to a broad audience with varying levels of expertise.

All things considered, anyone who wants to gain practical machine learning knowledge will find Machine Learning Mastery a valuable resource. Whatever your level of expertise, the site offers material that can deepen your understanding of the subject and improve your skills.

Cracking the Language Code: NLP Breakthrough Wonders

- Transformer Models: Transformer models, particularly BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pre-trained Transformer), have been highly beneficial for NLP. These architectures have produced breakthrough results on many NLP tasks, such as text classification, named entity recognition, and machine translation.

- Transfer Learning: Transfer learning has become standard practice, especially pre-training large language models on enormous datasets and then fine-tuning them for specific applications. This approach has proven effective at improving NLP models, particularly when little labeled data is available for a given task.

- Multimodal Natural Language Processing (NLP): Models that analyze and generate data across multiple modalities, such as text, audio, and images, are becoming increasingly common. This has enabled tasks like image captioning, in which a model produces a textual description of an image.

- Few-Shot Learning: GPT-3 and related models have proven quite effective at few-shot learning. They can complete new tasks given only a few examples or instructions, showing that knowledge acquired during pre-training generalizes to tasks they were not explicitly trained on.

- Fair and Ethical NLP: NLP researchers and practitioners have increasingly focused on reducing biases in language models and on making sure NLP applications account for ethics. Alongside guidelines for responsible AI development, efforts have been made to build fairer, less biased models.

- Efficiency Improvements: There have been efforts to make large language models more efficient in terms of computation and resource requirements. This includes model distillation techniques, quantization, and designing smaller variants of transformer models that still maintain good performance.

- Domain-Specific Models: To improve performance on specialized tasks in particular domains, such as law, medicine, or science, domain-specific language models have been developed. These models are often fine-tuned on datasets unique to the domain.

- Conversational and Interactive AI: Progress has been made in building more capable, context-aware conversational systems. Increasingly sophisticated models are being developed to understand natural-language interactions and respond with answers that are coherent and appropriate to the situation.
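
The transformer models listed above (BERT, GPT) are built around scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, as defined in the original Transformer paper. The following is a minimal single-head sketch in plain Python, assuming the queries, keys, and values are given directly as lists of vectors; real models add learned projection matrices, multiple heads, masking, and batched tensor operations, all omitted here. The function names are illustrative, not any library's API.

```python
import math


def softmax(xs):
    """Turn a list of scores into weights that are positive and sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return one output vector per query: softmax(q . K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output = attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Self-attention over three toy 2-dimensional token vectors (hypothetical data).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = scaled_dot_product_attention(tokens, tokens, tokens)
```

In self-attention the same token vectors serve as queries, keys, and values, so each output row is a mix of all tokens, weighted by how similar each token is to the one doing the "looking."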
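
The quantization mentioned under Efficiency Improvements can be illustrated with a simplified scheme: symmetric, per-tensor 8-bit quantization, where every float weight is divided by a single scale factor and rounded to an integer in [-127, 127], cutting storage from 32 bits to 8 bits per weight. This sketch assumes that scheme for illustration only; production toolkits typically add per-channel scales, zero points, calibration data, and integer compute kernels.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() picks the nearest integer step; clamp to the int8 range for safety.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate floats from the stored integers and the scale."""
    return [v * scale for v in q]


# Hypothetical weights, chosen only for illustration.
weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
# Each weight is reconstructed to within half a quantization step (scale / 2).
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

The trade-off is visible in the example: large weights survive almost exactly, while a tiny weight such as 0.003 rounds to zero because it is smaller than half a quantization step.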

Visual Wonders: Unraveling Computer Vision's Latest Achievements

- Convolutional Neural Networks (CNNs): CNNs have been central to modern computer vision. Models such as AlexNet, VGG, ResNet, and EfficientNet have achieved state-of-the-art performance on tasks including image classification, object detection, and segmentation. These architectures form the foundation of many computer vision applications.

- ImageNet Large Scale Visual Recognition Challenge: The ImageNet competition has served as a standard benchmark for evaluating and developing image classification algorithms. The dramatic accuracy gains that deep learning models, especially CNNs, achieved on ImageNet marked a sea change in computer vision research.

- Semantic Segmentation: Semantic segmentation assigns a class label to every pixel in an image. Deep learning models such as FCN (Fully Convolutional Network) and U-Net have shown impressive performance on this task, with applications in medical image analysis, scene understanding, and autonomous driving.

- Generative Adversarial Networks (GANs): GANs have proven effective for computer vision applications such as image-to-image translation, image enhancement, and image generation. Leading GAN architectures such as StyleGAN are exceptionally good at generating realistic, high-quality images.

- Transfer Learning: Pre-training networks on large datasets and then fine-tuning them for specific use cases is an established methodology. Transfer learning has been key to the success of computer vision models, enabling them to generalize well to new tasks with little labeled data.

- 3D Computer Vision: Advances in 3D computer vision have made new techniques for 3D reconstruction, 3D object recognition, and depth estimation possible. These advances benefit augmented reality, virtual reality, and robotics.

- Efficient Models: Building models that are more computationally efficient without sacrificing performance has been a priority. In resource-constrained settings, architectures such as EfficientNet and MobileNet can be advantageous because they achieve good accuracy with far fewer parameters.

- Robustness and Adversarial Defense: Researchers have been working to make computer vision models more resistant to adversarial attacks. Adversarial training and model interpretability are two approaches that have been investigated to address the weaknesses of deep neural networks.

- Real-Time Applications: Advances in AI have enabled real-time applications such as gesture recognition, facial recognition, and augmented reality (AR). These applications are now crucial to many industries, including security, gaming, and healthcare.
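
The core operation inside the CNNs listed above is a small filter slid across the image, computing a dot product at each position. Below is a minimal single-channel, stride-1, no-padding sketch in plain Python (strictly a cross-correlation, which is what deep learning libraries call convolution), followed by a ReLU. The hand-picked vertical-edge kernel is an assumption for illustration; in models such as AlexNet or ResNet the kernel weights are learned, and each layer has many channels and filters.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation: stride 1, no padding, single channel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Dot product between the kernel and the image patch under it.
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


def relu(feature_map):
    """Element-wise ReLU: keep positive activations, zero out the rest."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical 4x4 image: dark left half (0), bright right half (1).
image = [[0, 0, 1, 1] for _ in range(4)]
# Hand-picked 2x2 kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 1], [-1, 1]]
feature_map = relu(conv2d(image, kernel))
```

The resulting 3x3 feature map is zero everywhere except the middle column, where the kernel straddles the edge; stacking many such learned filters is what lets a CNN build up from edges to textures to objects.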
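
The adversarial attacks mentioned under Robustness and Adversarial Defense can be illustrated with the Fast Gradient Sign Method (FGSM), a well-known attack that shifts every input feature by a small amount epsilon in the direction that increases the model's loss. To stay self-contained, this sketch assumes a toy logistic-regression classifier with hand-picked weights instead of a deep image model. For binary cross-entropy, the gradient of the loss with respect to the input is (p - y) * w, so no autodiff library is needed.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, epsilon):
    """Fast Gradient Sign Method against a logistic-regression classifier.

    For binary cross-entropy loss, dLoss/dx = (p - y) * w, so each feature
    is shifted by epsilon in the direction that increases the loss.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical model and input, chosen only for illustration.
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.3, 0.1, 0.2], 1
x_adv = fgsm(w, b, x, y, epsilon=0.3)
before = predict_proba(w, b, x)
after = predict_proba(w, b, x_adv)
```

Here the clean input is classified as class 1 (probability about 0.65), while the perturbed input drops to about 0.39 and is misclassified, even though no feature moved by more than 0.3. Adversarial training folds such perturbed examples back into the training set so the model learns to resist them.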

The Impact of Quantum Computing on AI

Below are some ways in which quantum computing could affect AI:

- Accelerating Machine Learning Algorithms: Quantum computing could potentially speed up certain machine learning computations considerably.

- Quantum Machine Learning: Quantum machine learning is an interdisciplinary field that combines machine learning and quantum computing. Quantum algorithms may be especially useful for tasks such as training certain kinds of machine learning models, performing linear algebra operations, and solving optimization problems.

- Enhancing Optimization: Optimization problems are common in AI, and the ability of quantum computers to operate on superpositions of many candidate solutions may help solve them. Quantum algorithms such as the Quantum Approximate Optimization Algorithm (QAOA) aim to find good approximate solutions more quickly than classical algorithms.

- Improved Search Algorithms: Quantum computers may excel in certain search problems. Grover's algorithm, for instance, provides a quadratic speedup over classical algorithms for unstructured search problems. This could have implications for tasks like database searching or optimization.

- Simulating Quantum Systems: Quantum computers are naturally suited for simulating quantum systems, and some AI applications involve the simulation of quantum phenomena. Quantum machine learning models could potentially benefit from more accurate simulations of quantum systems, leading to improved AI capabilities.

- Security and Cryptography: Quantum computing threatens traditional cryptographic systems, but it also creates new opportunities for quantum-safe encryption. This could affect the privacy and security of AI systems, especially as quantum computers become more widespread.

- Hybrid Quantum-Classical Models: Researchers are exploring hybrid quantum-classical models, in which some parts of a machine learning model run on quantum hardware while others run on classical computers. This approach aims to combine the strengths of classical and quantum computing.

- Addressing Big Data Challenges: Quantum computers may help with certain big data challenges by processing and analyzing large datasets efficiently. Quantum parallelism and entanglement could lead to improvements in handling enormous quantities of data.
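
Grover's quadratic speedup can be made concrete with a small classical simulation. For N = 2^n items with one marked item, Grover's algorithm needs about (pi/4) * sqrt(N) oracle queries, whereas unstructured classical search needs about N/2 on average. This sketch tracks the state vector directly in plain Python: the oracle flips the sign of the marked item's amplitude, and the diffusion step reflects every amplitude about the mean. It is illustrative only, since simulating n qubits this way takes memory exponential in n, and the function name is hypothetical.

```python
import math


def grover_success_probability(n_qubits, marked, iterations=None):
    """Simulate Grover's search on a real-valued state vector.

    Returns the probability of measuring the marked index after the
    given number of iterations (default: about (pi/4) * sqrt(N)).
    """
    n_items = 2 ** n_qubits
    if iterations is None:
        iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    # Start in the uniform superposition: every item has amplitude 1/sqrt(N).
    state = [1 / math.sqrt(n_items)] * n_items
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        state[marked] = -state[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(state) / n_items
        state = [2 * mean - a for a in state]
    return state[marked] ** 2


# 8 items (3 qubits): default of 2 Grover iterations vs. zero iterations (random guess).
p_grover = grover_success_probability(3, marked=5)
p_guess = grover_success_probability(3, marked=5, iterations=0)
```

With 3 qubits, two iterations raise the probability of measuring the marked item from 1/8 to about 0.95; with 10 qubits (1,024 items), 25 iterations give a success probability above 0.99.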

It's important to emphasize that large-scale, practical quantum computers are still in their early stages and face significant technical barriers. As quantum hardware matures, its influence on AI is expected to grow. Researchers are actively investigating the potential connections between AI and quantum computing in order to expand capabilities and tackle hard problems more effectively.

Code with a Conscience: Ethics and Responsibility in Artificial Intelligence

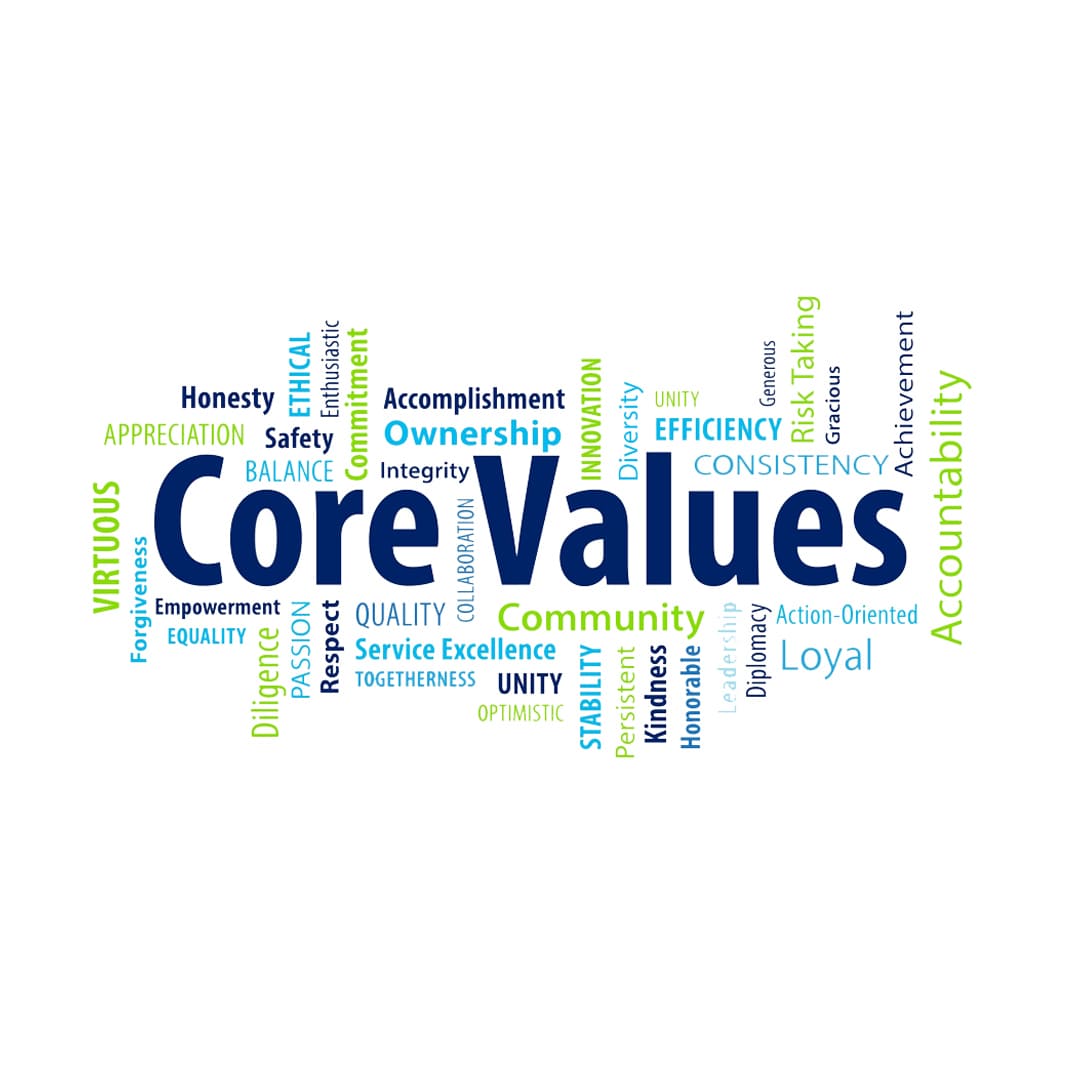

Ethical principles and responsible AI practices are essential for designing and deploying AI systems. As AI technologies become more deeply embedded in every aspect of society, ethical considerations become increasingly important. The key considerations are:

- Fairness and Bias: AI systems can inadvertently reinforce or amplify prejudices present in their training data. To ensure fair and appropriate outcomes for everyone, bias must be identified and reduced.

- Transparency: AI models can behave like complex "black boxes," making it hard to understand how they reach decisions. AI systems need to be transparent and interpretable to build trust and provide insight into their decision-making.

- Privacy: Because AI systems typically rely on large datasets, protecting people's privacy is crucial. It is important to comply with privacy regulations and to put strong data protection mechanisms and data anonymization in place.

- Security: AI models can be highly susceptible to adversarial attacks, in which malicious actors manipulate input data to mislead the model. Putting security measures in place is essential to prevent such attacks, particularly in critical sectors like healthcare and finance.

- Accountability: It's critical to clearly define who is responsible for the creation, application, and results of AI systems. This entails determining who is at fault and making sure there are procedures in place for dealing with mistakes or unforeseen effects.

- Informed Consent: Obtaining informed consent becomes crucial when AI systems directly affect people. Users should understand how their personal data is being used and what the consequences of AI-driven decisions might be.

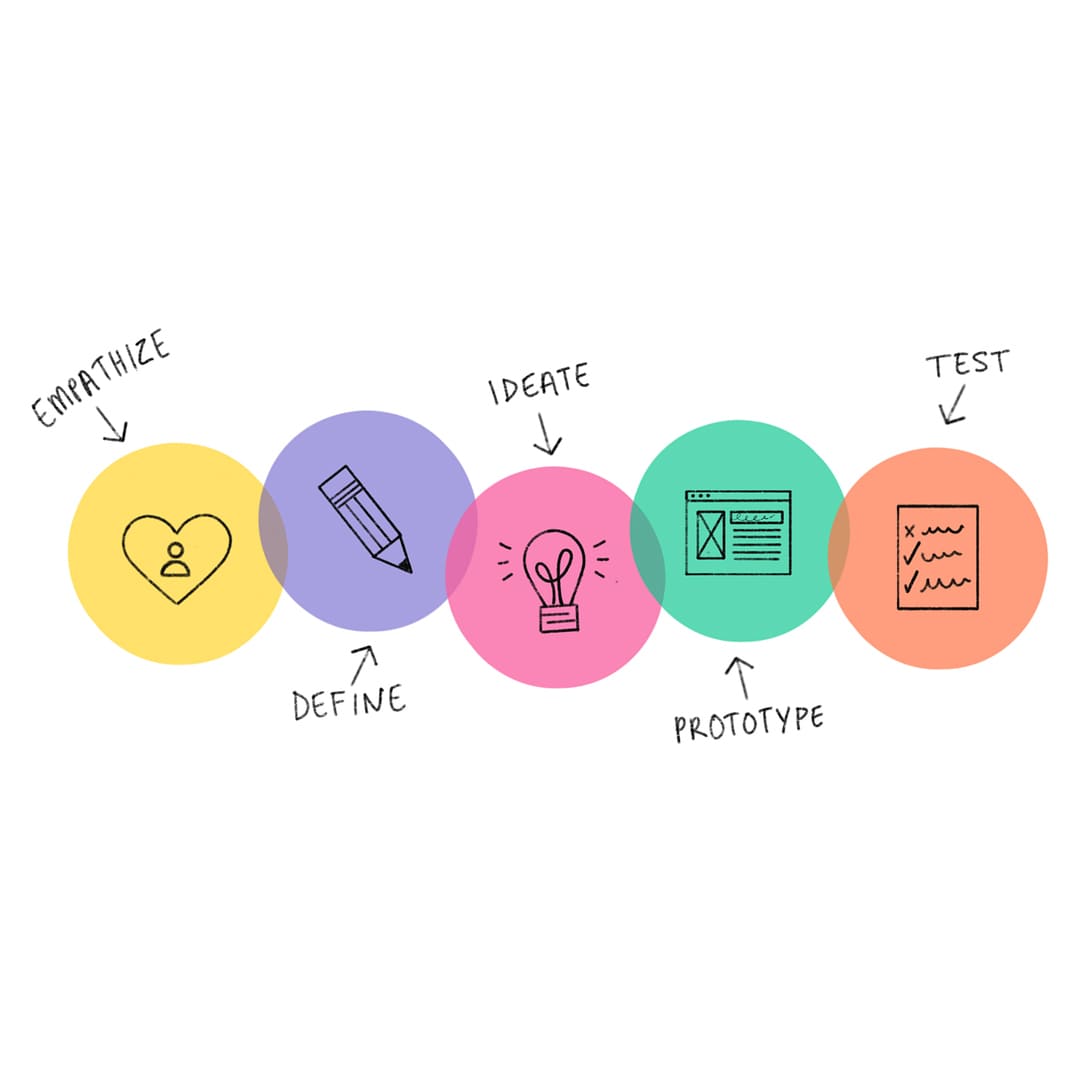

- Human-Centered Design: By involving stakeholders and end users at every stage of design and development, human-centered design helps ensure that AI systems align with human values, needs, and goals. Applying human-centered design principles can lead to AI systems that are more socially and morally sound.

- Robustness and Reliability: It is essential to ensure that AI systems are resilient and trustworthy, especially in situations where mistakes could have catastrophic consequences. Implementing fail-safe mechanisms and continuous monitoring is important.

- Environmental Impact: Large-scale AI models and training processes can have a significant environmental impact. Developing energy-efficient algorithms and adopting responsible computing practices help mitigate these concerns.

- Global Aspects: Cultural diversity should be taken into account when developing AI systems. Recognizing and accommodating cultural differences helps minimize bias and ensures that AI solutions are inclusive and adaptable across a range of contexts.

- Continuous Assessment and Auditing: AI systems should undergo regular evaluations and audits to ensure that they continue to comply with ethical standards after deployment. This is especially important because real-world conditions can introduce new difficulties or biases.
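
Identifying bias, as the Fairness and Bias point requires, starts with measuring it. One simple and commonly used check is demographic parity: compare the rate of positive predictions (for example, loan approvals) across groups. This plain-Python sketch computes per-group selection rates and the largest gap between them. The data are hypothetical, and demographic parity is only one of several fairness metrics, which can conflict with one another, so a single number like this is a starting point for an audit rather than a complete one.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive predictions (1s) within each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model decisions (1 = approved) for applicants in groups "a" and "b".
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
rates = selection_rates(preds, groups)
gap = demographic_parity_difference(preds, groups)
```

In this toy data, group "a" is approved 75% of the time and group "b" only 25%, a gap of 0.5. Running the same check periodically on live predictions is one concrete form of the continuous auditing described above.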

AI ethics is an evolving field, and policymakers, technologists, ethicists, and other stakeholders must work together to create and deploy AI systems responsibly. When AI adheres to ethical standards and regulations, it can benefit society while minimizing potential harms. Organizations must take a proactive, ethical stance across the entire AI development life cycle.

Unleashing Genius: DeepMind's Latest Leaps in AI Excellence

Here are a few of DeepMind's notable developments and breakthroughs:

- MuZero and General Reinforcement Learning: DeepMind developed MuZero, a system capable of mastering challenging games without being told the rules that govern them. MuZero achieved state-of-the-art results across multiple games, showcasing its ability to learn and plan in a wide variety of environments.

- Artificial Intelligence for Protein Structure Prediction: Following the success of AlphaFold, DeepMind has continued to apply AI to biology. The organization is pursuing collaborations and research initiatives that use AI to better understand and predict the structure and function of proteins.

- AI for StarCraft II: In the extremely challenging real-time strategy game StarCraft II, DeepMind's AI agents, including AlphaStar, showed exceptional ability. By displaying robust strategies and adaptive behavior, they demonstrated the potential of AI in complex and uncertain scenarios.

- Climate Science and Artificial Intelligence: DeepMind is exploring applications of AI in climate research. This includes using AI to forecast weather and climate, with the goal of better understanding the effects of climate change.

To sum up, the steady stream of AI breakthroughs heralds a revolutionary era that is pushing humanity into unexplored technological frontiers. These developments have profound consequences beyond science and technology, because they show that machines can learn, adapt, and even create. Combined with human creativity, AI has the power to improve our quality of life, solve complex problems, and boost productivity across a broad range of industries. However, these advances also raise moral and ethical questions that must be properly addressed. As we navigate the future shaped by AI, we must balance progress with ethical considerations so that the benefits of AI are harnessed for the greater good of humanity. The field is still young, but its progress holds great promise for a future in which human-machine cooperation produces unprecedented breakthroughs and positive social change.
