Web Development for Scalability and Performance

In the always growing advanced scene, where client assumptions take off and contest heightens, the significance of web improvement for versatility and execution couldn't possibly be more significant. As organizations endeavor to convey consistent and responsive internet based encounters to their clients, the capacity of a site or web application to deal with expanding loads and keep up with ideal execution becomes central. Adaptability guarantees that the framework can nimbly oblige development, while execution streamlining ensures quick responsiveness considerably under weighty traffic. In this period where milliseconds can represent the moment of truth client commitment, becoming the best at versatile and performant web advancement is vital for associations planning to flourish in the computerized domain.

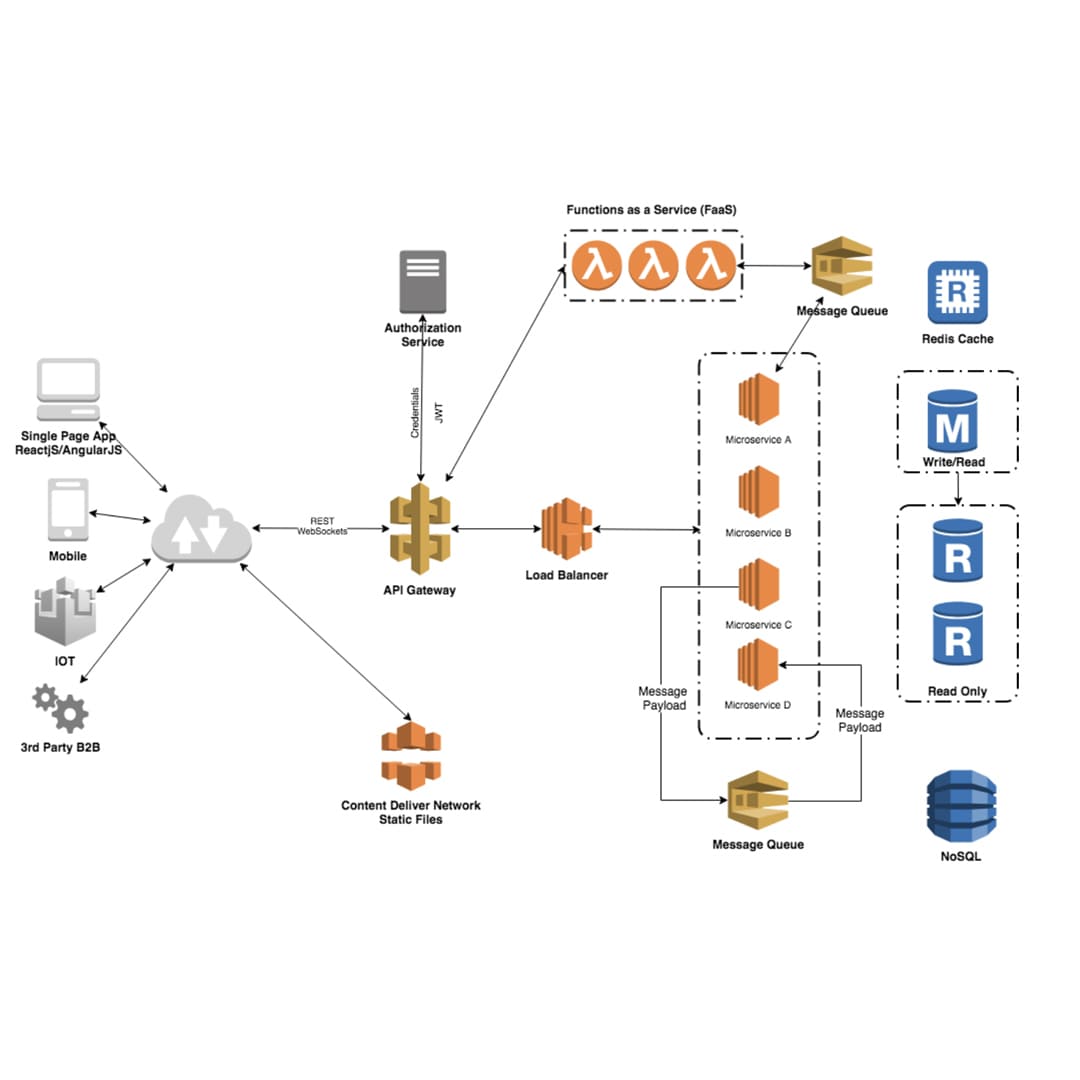

Scalable Architecture Patterns

Versatile engineering designs are plan standards and approaches that empower a framework to deal with expanding burdens and development without forfeiting execution or dependability. Here are some famous adaptable engineering designs:

Microservices Design:

Deteriorate the application into little, inexactly coupled administrations, each answerable for a particular capability or space.

Each assistance can be freely evolved, conveyed, and scaled, permitting groups to simultaneously chip away at various pieces of the framework.

Empowers better issue disengagement and versatility since each assistance can be scaled autonomously in light of its interest.

Serverless Design:

Construct applications utilizing serverless processing stages like AWS Lambda, Purplish blue Capabilities, or Google Cloud Capabilities.

Capabilities are set off by occasions and naturally scaled in view of interest, with compelling reason needed to oversee servers or framework.

Ideal for occasion driven and stateless jobs, yet may have impediments for long-running or asset concentrated undertakings.

Occasion Driven Engineering (EDA):

Utilize non concurrent informing and occasions to decouple parts and empower free coupling between administrations.

Administrations impart through occasions, empowering better versatility, adaptation to internal failure, and responsiveness.

Occasion driven frameworks are in many cases assembled utilizing message merchants like Kafka, RabbitMQ, or AWS SNS/SQS.

Flat Scaling (Scale-Out):

Add more cases of servers or administrations to appropriate the heap equitably across numerous machines.

Load balancers disperse approaching solicitations among accessible occurrences, guaranteeing high accessibility and versatility.

Requires stateless administrations or systems to oversee meeting state remotely (e.g., utilizing tacky meetings or meeting replication).

Information base Sharding:

Segment the data set into more modest, more reasonable shards in light of specific models (e.g., client ID, geographic district).

Disperse information across various data set cases or groups, decreasing the heap on individual data sets and empowering flat scaling.

Requires cautious preparation and execution to guarantee information consistency and productive questioning across shards.

Caching:

Use reserving components to store oftentimes got to information or processed results nearer to the application, lessening the need to get information from backend administrations or data sets.

Use dispersed reserving arrangements like Redis or Memcached for high accessibility and adaptability.

Store negation techniques are fundamental to guarantee information consistency and forestall flat information.

Containerization and Organization:

Bundle applications and their conditions into compartments utilizing advancements like Docker.

Arrange containerized applications utilizing apparatuses like Kubernetes, Docker Multitude, or Amazon ECS to robotize sending, scaling, and the board.

Compartments give consistency across improvement, testing, and creation conditions, while arrangement works on the administration of enormous scope organizations.

Versatile Burden Adjusting:

Utilize versatile burden balancers that can naturally increase or down in light of approaching traffic and request.

Disperse approaching solicitations across various servers or administrations, guaranteeing high accessibility, adaptation to non-critical failure, and adaptability.

Cloud suppliers offer oversaw load offsetting administrations with highlights like auto-scaling, wellbeing checks, and traffic directing.

These versatile design examples can be joined and custom-made to meet the particular necessities and limitations of your application, guaranteeing that it can develop and adjust to changing requests while keeping up with execution and unwavering quality.

Load Balancing Techniques

Load adjusting strategies are utilized to convey approaching organization traffic across numerous servers or assets to guarantee ideal usage, expand throughput, limit reaction time, and keep up with high accessibility. Here are some normal burden adjusting methods:

Round Robin Load Balancing:

Incoming requests are sequentially distributed across a pool of servers in a circular order.

Simple and easy to implement.

Does not take server load or capacity into account, which can lead to uneven distribution if servers have different capabilities.

Weighted Round Robin Load Balancing:

Like cooperative effort, however doles out a load to every server in view of its ability or execution.

Servers with higher loads get more demands contrasted with servers with lower loads.

Useful for balancing load across servers with different capabilities or capacities.

Least Connection Load Balancing:

Approaching solicitations are directed to the server with the least dynamic associations.

Guarantees that the heap is equitably conveyed among servers in light of their ongoing responsibility.

Ideal for extensive associations where server limits might change over the long haul.

IP Hash Burden Adjusting:

Utilizes a hash capability in view of the client's IP address to figure out which server to send the solicitation to.

Guarantees that solicitations from a similar client are constantly steered to a similar server, helpful for meeting perseverance.

Can prompt lopsided dissemination on the off chance that the quantity of clients isn't equally dispersed across IP addresses.

Least Response Time Load Balancing:

Routes requests to the server with the lowest response time or latency.

Requires monitoring of response times and may introduce overhead for real-time decision making.

Ensures that requests are sent to the server that can respond the fastest, improving user experience.

Versatile Burden Adjusting:

Progressively changes the heap adjusting calculation in light of ongoing server wellbeing and execution measurements.

Screens server load, reaction times, and different variables to settle on informed conclusions about demand steering.

Helps prevent overloading of servers and ensures efficient resource utilization.

Content-Based Load Balancing:

Routes requests based on the content of the request or specific attributes (e.g., URL path, HTTP headers).

Useful for applications with different types of content or services that require specialized handling.

Allows for granular control over request routing based on content characteristics.

Geographic Load Balancing:

Courses solicitations to servers in light of the geographic area of the client.

Guarantees that solicitations are served from servers that are geologically nearer to the client, lessening inertness.

Helpful for worldwide applications with conveyed server foundation.

Health Check Load Balancing:

Screens the wellbeing and accessibility of servers utilizing wellbeing checks (e.g., TCP checks, HTTP checks).

Courses demands just to sound servers, staying away from servers that are encountering issues or free time.

Keeps up with high accessibility and dependability of the application.

By utilizing these heap adjusting strategies, you can guarantee that your application can proficiently convey approaching traffic across numerous servers or assets, further developing execution, dependability, and adaptability.

Caching Strategies for Web Applications

Storing is a pivotal methodology for working on the exhibition and versatility of web applications by decreasing the need to over and over recover or bring information from the first source. Here are some reserving systems ordinarily utilized in web applications:

Page Caching:

Cache entire HTML pages to serve them directly to users without executing backend logic or generating dynamic content.

Can be implemented using in-memory caching solutions like Redis or Memcached or by using caching mechanisms provided by web servers or CDNs.

Fragment Caching:

Cache specific parts or fragments of a page that are expensive to generate or compute.

Useful for caching dynamic content within a page, such as user-specific data or frequently accessed widgets.

Requires more granular caching mechanisms compared to page caching, often implemented using caching libraries or template-level caching.

Object Caching:

Cache individual objects or data structures retrieved from databases or external services.

Helps reduce the overhead of fetching and processing data by storing frequently accessed objects in memory.

Can be implemented using in-memory caches like Redis, Memcached, or distributed caching libraries like Hazelcast.

Query Result Caching:

Cache the results of database queries to avoid executing the same query multiple times.

Effective for read-heavy applications where certain database queries are frequently repeated.

Requires careful invalidation mechanisms to ensure that cached query results remain consistent with the underlying data.

HTTP Caching:

Influence HTTP reserving instruments to store reactions at the client, mediator intermediaries, or CDN edge servers.

Uses HTTP headers, for example, Store Control, Terminates, and ETag to control reserving conduct.

Diminishes server burden and organization idleness by serving stored reactions straightforwardly from client or intermediary reserves.

Content Conveyance Organization (CDN) Reserving:

Store static resources (e.g., pictures, CSS, JavaScript records) on conveyed edge servers of a CDN.

Further develops execution by serving content from geologically nearer servers to clients, diminishing inactivity.

CDNs frequently give configurable storing strategies and reserve refutation instruments to control content newness.

In-Memory Storing:

Stores regularly got information in memory to decrease idleness and further develop reaction times.

In-memory reserving arrangements like Redis or Memcached offer elite execution key-esteem stores for putting away and recovering stored information.

Appropriate for storing meeting information, client confirmation tokens, or any information that should be gotten to rapidly and habitually.

Cache Invalidation Strategies:

Implement mechanisms to invalidate or expire cached data when it becomes stale or outdated.

Use techniques such as time-based expiration, cache invalidation events, or versioning to ensure data consistency.

Balancing between cache retention and freshness is crucial to maintain performance while ensuring data integrity.

Cache-Control Policies:

Characterize reserve control headers to indicate storing orders and control how content is reserved at different levels (client, intermediary, CDN).

Use mandates like max-age, no-reserve, no-store, and must-revalidate to indicate storing conduct and newness necessities.

Calibrate store control approaches in light of the idea of content and application necessities.

By utilizing these reserving methodologies really, web applications can fundamentally further develop execution, decrease server burden, and upgrade versatility, prompting a superior generally client experience.

Continuous Monitoring and Performance Optimization

Consistent checking and execution improvement are fundamental practices for keeping up with the wellbeing, unwavering quality, and effectiveness of web applications over the long haul. Here is an organized way to deal with constant observing and execution improvement:

Characterize Key Execution Markers (KPIs):

Distinguish and characterize the key measurements that are basic to your application's exhibition and client experience. This might incorporate reaction time, throughput, mistake rates, asset usage, and client fulfillment measurements.

Set benchmarks and focuses for each KPI to lay out execution objectives and screen progress over the long haul.

Carry out Checking Apparatuses:

Convey checking apparatuses and frameworks to gather and examine execution information continuously. This might incorporate application execution observing (APM) apparatuses, logging structures, server checking arrangements, and engineered checking instruments.

Screen both foundation level measurements (e.g., computer chip use, memory utilization, network traffic) and application-level measurements (e.g., reaction times, exchange throughput, blunder rates).

Nonstop Execution Testing:

Coordinate execution testing into your consistent combination and conveyance (CI/Album) pipeline to identify execution relapses from the get-go in the advancement cycle.

Lead normal burden testing, stress testing, and adaptability testing to distinguish bottlenecks, execution limits, and asset limitations.

Use instruments like JMeter, Gatling, or Insect for load testing and profiling apparatuses like YourKit or VisualVM for breaking down application execution.

Constant Alarming and Occurrence The board:

Arrange making components aware of advising pertinent partners when execution measurements surpass predefined limits or peculiarities are distinguished.

Carry out episode reaction methodology and acceleration conventions to address execution issues quickly and limit free time.

Use unified logging and checking stages to total logs and measurements from various parts of the application stack for extensive perceivability.

Execution Profiling and Improvement:

Use profiling apparatuses to recognize execution areas of interest, slow data set questions, wasteful calculations, and asset concentrated tasks in your codebase.

Streamline basic code ways, data set inquiries, and asset use to further develop productivity and lessen reaction times.

Apply best practices for execution streamlining, for example, reserving, sluggish stacking, code refactoring, information base ordering, and algorithmic upgrades.

Scope organization and Adaptability:

Perform scope organization activities to conjecture future development and asset prerequisites in view of authentic information, utilization examples, and business projections.

Guarantee that your framework, data set, and application design can scale evenly and in an upward direction to deal with expanding burdens and client simultaneousness.

Computerize provisioning and scaling processes utilizing cloud arrangement instruments (e.g., Kubernetes, Terraform) to adjust to changing interest progressively.

Client Experience Observing:

Screen client experience measurements, for example, page load times, exchange finish rates, and change rates to check the effect of execution on client fulfillment and business results.

Gather client input, direct convenience testing, and examine client conduct to recognize regions for development and focus on execution enhancements appropriately.

Constant Learning and Improvement:

Encourage a culture of nonstop learning, trial and error, and improvement inside your turn of events and tasks groups.

Lead post-mortems and underlying driver investigation for execution episodes to distinguish examples learned, carry out remedial activities, and forestall repeat.

Remain informed about arising patterns, advances, and best practices in execution improvement through gatherings, discussions, and industry distributions.

By following these practices for ceaseless checking and execution improvement, you can proactively distinguish and address execution issues, streamline asset usage, and convey a quick, solid, and versatile web application that meets the developing necessities of your clients.

All in all, the quest for versatility and execution in web improvement isn't only a specialized thought yet an essential basis in the present computerized scene. As the interest for quicker, more solid, and adaptable web encounters keeps on rising, organizations should focus on these angles to stay cutthroat. By putting resources into a hearty framework, taking on versatile engineering, and carrying out execution improvement procedures, associations can not just meet the developing necessities of their clients yet additionally open new doors for development and advancement. At last, in the tireless quest for greatness, web improvement for versatility and execution remains as a foundation for outcome in the dynamic and consistently developing web-based environment.

Recent Stories

500k Customer Have

Build a stunning site today.

We help our clients succeed by creating brand identities.

Get a Quote